The Strasbourg Observatory is in charge of processing the data from the MXT camera of the SVOM mission.

The Mission

The SVOM mission (see https://www.svom.eu/) is a Franco-Chinese mission dedicated to the study of the most distant stellar explosions, the gamma-ray bursts. The mission includes 4 main instruments aboard a satellite in low Earth orbit (ECLAIRs and GRM in hard X-rays and gamma rays, MXT in soft X-rays, and VT in the visible) as well as dedicated ground telescopes for the detection and follow-up of the gamma-ray bursts detected from the satellite, using alerts transmitted via a continuous link with VHF antennas. SVOM was successfully launched on 22 June from the Xichang base.

Like other GRB missions (e.g. Swift or Fermi), the management of SVOM alerts will be assigned to Burst Advocates, who will monitor all activities connected with the alert, act as points of contact for the non-SVOM world, and eventually take decisions about the follow-up of the source.

The Strasbourg Contribution

Strasbourg Observatory is part of the French Science Consortium (10 labs led by the CEA), which is in charge of delivering and operating the ground software for the French payload. We are responsible for the design, development, and support of the MXT software. This software provides calibrated data and science products for all operating modes and scientific programmes of SVOM, i.e. GRB and non-GRB science, including counterparts to multi-messenger sources (gravitational waves and neutrinos).

The MXT camera

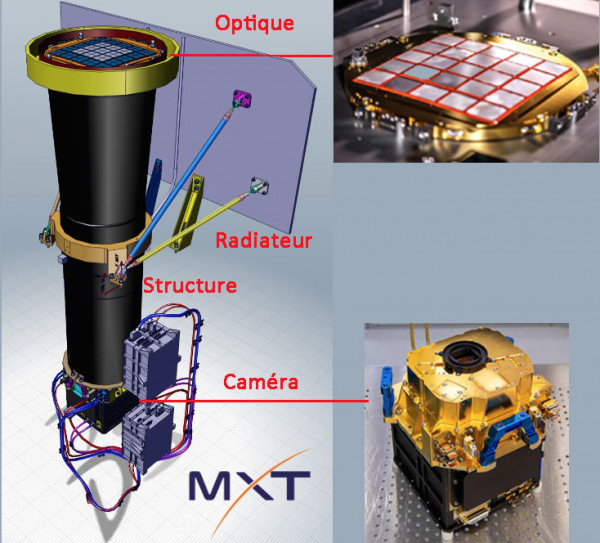

In response to the alert transmitted by ECLAIRs, the MXT (Microchannel X-ray Telescope) will observe the gamma-ray burst in the soft X-ray range (energy between 0.2 and 10 keV), in particular at the very beginning of the afterglow. It is developed in France by CNES and CEA-Irfu, in close collaboration with the University of Leicester in the United Kingdom and the Max-Planck-Institut für extraterrestrische Physik in Garching, Germany.

The MXT has lobster-eye optics (glass micro-channels) at the entrance of the telescope, with a diameter of 24 cm and a weight of 2 kg. The structure, with a focal length of 1.15 m, is made of carbon fiber and carries the camera at the focus. The complete set weighs 35 kg.

The Processing Pipeline

The pipelines we developed for MXT (see flowchart below for an example) run a sequence of independent tasks, first downloading the necessary input files from the scientific database (SDB) and the instrument configuration and calibration files (CALDB). The input data is then calibrated and used to produce a set of science products related to the observations (e.g. images) and to the sources detected in the field of view, such as energy spectra and light curves. All the created products are stored in the SDB and sent to the observers when needed.
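As a rough illustration of the task sequence described above, the following Python sketch chains independent tasks that each read from and add to a shared context. The task names, the context keys and the placeholder values are all hypothetical; they do not reflect the actual MXT pipeline tasks or their interfaces.

```python
from typing import Callable, Dict, List

Context = Dict[str, object]
Task = Callable[[Context], Context]


def fetch_inputs(ctx: Context) -> Context:
    # Stand-in for downloading input files from the SDB and the CALDB.
    return {**ctx, "events": [0.5, 1.2, 3.4, 7.8], "gain": 1.0}


def calibrate(ctx: Context) -> Context:
    # Apply a (hypothetical) gain correction to raw event energies, in keV.
    gain = ctx["gain"]
    return {**ctx, "calibrated": [e * gain for e in ctx["events"]]}


def make_products(ctx: Context) -> Context:
    # Keep events in the 0.2-10 keV band and build a toy "spectrum" product.
    in_band = [e for e in ctx["calibrated"] if 0.2 <= e <= 10.0]
    return {**ctx, "products": {"spectrum": in_band, "n_events": len(in_band)}}


def run_pipeline(tasks: List[Task], ctx: Context) -> Context:
    # Run each task in order; a task only depends on the context it receives.
    for task in tasks:
        ctx = task(ctx)
    return ctx


result = run_pipeline([fetch_inputs, calibrate, make_products], {"obs_id": "demo"})
```

Keeping each task as a function of the context alone makes it possible to test tasks in isolation or to reorder them, which is one plausible reading of "independent tasks".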

The Simulation Bench

To guide the development of our pipeline tasks, validate them, and identify problematic cases prior to the operation phase, we have also designed a simulation pipeline, producing realistic data accounting for telescope and detector effects.

The simulation bench aims at testing the workflow and functionalities of the SVOM-MXT pipeline developed in Strasbourg. Thousands of simulations (Monte Carlo method), mixing the characteristics of the pointing (position, exposure time, …) with those of the studied objects (number and position of sources in the field of view, flux, spectral characteristics, variability, …), are generated and then injected into the pipeline. The values computed by the pipeline algorithms are then automatically compared to the input values. This makes it possible to assess the quality of the pipeline and the relevance of the returned values, to easily isolate problem cases that could be encountered during the operational phase, and to make the necessary corrections.

During the development phase, the simulation bench runs around the clock on a wide variety of input sources in order to detect as many patterns as possible that make the pipeline fail.
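The bench logic described in this section can be sketched in a few lines of Python. This is only a toy model under stated assumptions: `toy_pipeline` is a hypothetical stand-in for the real pipeline, and the parameter ranges and the tolerance are arbitrary illustration values.

```python
import random
from typing import Dict, List


def toy_pipeline(true_flux: float, exposure: float, rng: random.Random) -> float:
    # Hypothetical stand-in for the real pipeline: returns a flux estimate
    # whose noise decreases with the expected number of counts.
    counts = max(true_flux * exposure, 1.0)
    return true_flux * (1.0 + rng.gauss(0.0, 1.0) / counts ** 0.5)


def run_bench(n_sims: int, tolerance: float, seed: int = 0) -> List[Dict[str, float]]:
    rng = random.Random(seed)
    problem_cases = []
    for _ in range(n_sims):
        # Draw pointing and source characteristics at random (Monte Carlo).
        exposure = rng.uniform(100.0, 10_000.0)  # seconds
        true_flux = rng.uniform(0.01, 10.0)      # counts per second
        measured = toy_pipeline(true_flux, exposure, rng)
        # Compare the recovered value to the injected one.
        rel_error = abs(measured - true_flux) / true_flux
        if rel_error > tolerance:
            problem_cases.append(
                {"exposure": exposure, "true_flux": true_flux, "measured": measured}
            )
    return problem_cases


failures = run_bench(n_sims=1000, tolerance=0.5)
print(f"{len(failures)} problem cases out of 1000 simulations")
```

Fixing the random seed keeps a run reproducible, so a problem case isolated by the bench can be replayed while the corresponding task is being corrected.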

The Interoperability Framework

The Strasbourg Observatory also leads the design and development of the mechanisms (schemas and tools) ensuring a good level of interoperability between the different modules of the ground segment infrastructure. For this purpose, we provided the consortium with a complete workflow for managing the science product data model. We also defined a common vocabulary for all messages exchanged by the different stakeholders, and we designed a common control interface used by most of the processing pipelines. Finally, all of our products are delivered with a VO-compliant interface.

Message Schema

The French ground segment infrastructure covers a wide range of activities, from data unmarshalling to science analysis. It also includes the processing of all the instrument-related data. All of these modules, running in a Kubernetes cluster hosted by the CCIN2P3, have been developed by different teams. The basic principle of this distributed infrastructure is that any module can both write to and listen to a messaging stream (NATS) and can be controlled through REST APIs. A module can monitor another one by listening to NATS. It can trigger actions on that other module by invoking REST endpoints, or it can retrieve, for example, a processing report or a status by invoking other endpoints. A module can also decide on its own to perform an action after reading a specific message on NATS.

Although many programming rules have been laid down for the project, the components of this distributed architecture remain somewhat heterogeneous. In this context, it was crucial to invest significant effort in the interface definitions so that all of these modules work together. This topic was raised and then managed by the Strasbourg team. The solution we developed (see ADASS paper) is based on JSON schemas that constrain the vocabulary and the structure of the exchanged messages, as well as the description of the REST endpoints (OpenAPI) of most of the processing modules. These schemas are managed in a Git repository that is used as a common reference.
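To make the idea of a schema-constrained message concrete, here is a minimal Python sketch. The schema content (key names, status vocabulary) is hypothetical and is not taken from the SVOM repository. The small `validate` function only handles the JSON Schema keywords `type`, `required`, `properties` and `enum`; a real project would more likely rely on a full JSON Schema validator such as the `jsonschema` package.

```python
import json
from typing import Any, Dict, List

# Hypothetical schema for a status message (illustrative names only).
MESSAGE_SCHEMA: Dict[str, Any] = {
    "type": "object",
    "required": ["sender", "status"],
    "properties": {
        "sender": {"type": "string"},
        "status": {"type": "string", "enum": ["started", "done", "failed"]},
    },
}

_TYPES = {"object": dict, "string": str, "number": (int, float)}


def validate(instance: Any, schema: Dict[str, Any], path: str = "$") -> List[str]:
    """Return a list of error messages; an empty list means the message is valid."""
    errors: List[str] = []
    expected = schema.get("type")
    if expected and not isinstance(instance, _TYPES[expected]):
        return [f"{path}: expected {expected}"]
    if "enum" in schema and instance not in schema["enum"]:
        errors.append(f"{path}: {instance!r} not in allowed vocabulary")
    if isinstance(instance, dict):
        for key in schema.get("required", []):
            if key not in instance:
                errors.append(f"{path}: missing required key {key!r}")
        for key, sub in schema.get("properties", {}).items():
            if key in instance:
                errors.extend(validate(instance[key], sub, f"{path}.{key}"))
    return errors


good = json.loads('{"sender": "mxt-pipeline", "status": "done"}')
bad = json.loads('{"sender": "mxt-pipeline", "status": "finished"}')
print(validate(good, MESSAGE_SCHEMA))
print(validate(bad, MESSAGE_SCHEMA))
```

The second message is rejected because "finished" is not part of the agreed vocabulary, which is the kind of mismatch between independently developed modules that shared schemas are meant to catch.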

Product Format

Another major issue is to manage the product formats (list of keywords, allowed vocabulary, …) in such a way that any individual product can be retrieved, reprocessed and compared with other instances. This requires a very fine-grained description of all products throughout the mission. Any change to any science file must be notified to all modules using it (producer, consumer, storage).

This is achieved by using individual JSON product descriptors. These descriptors are used by the database (SDB) to validate (accept or reject) the product ingestion; they can also be used by pipelines to check whether generated files are compliant. These descriptors are managed in a Git repository, which also provides a complete validation suite. Any change must be validated by a committee, which prevents unwanted side effects of uncontrolled modifications.
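The accept-or-reject decision described above could look like the following Python sketch. The descriptor layout, the keyword names and the allowed values are assumptions made for illustration; the actual SVOM descriptors are not reproduced here.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical descriptor: keyword names, types and allowed values are
# illustrative and are not taken from the actual SVOM descriptors.
DESCRIPTOR: Dict[str, Dict[str, Any]] = {
    "INSTRUME": {"type": str, "allowed": ["MXT"]},
    "EXPOSURE": {"type": float},
    "OBS_ID": {"type": str},
}


def check_product(header: Dict[str, Any]) -> Tuple[bool, List[str]]:
    """Return (accepted, reasons) for a product header checked against DESCRIPTOR."""
    reasons: List[str] = []
    for keyword, rule in DESCRIPTOR.items():
        if keyword not in header:
            reasons.append(f"missing keyword {keyword}")
            continue
        value = header[keyword]
        if not isinstance(value, rule["type"]):
            reasons.append(f"{keyword} has type {type(value).__name__}")
        elif "allowed" in rule and value not in rule["allowed"]:
            reasons.append(f"{keyword}={value!r} is not an allowed value")
    # In this sketch, keywords unknown to the descriptor also cause a rejection.
    reasons += [f"unexpected keyword {k}" for k in header if k not in DESCRIPTOR]
    return (not reasons, reasons)


accepted, reasons = check_product(
    {"INSTRUME": "MXT", "EXPOSURE": 1200.0, "OBS_ID": "demo-001"}
)
```

Returning the list of reasons alongside the decision lets a pipeline run the same check before submission and report exactly which keywords are not compliant.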

VO Interface

The Virtual Observatory (VO) is the vision that astronomical datasets and other resources should work as a seamless whole. Many projects and data centers worldwide are working towards this goal. The International Virtual Observatory Alliance is an organization that debates and agrees the technical standards that are needed to make the VO possible. It also acts as a focus for VO aspirations, a framework for discussing and sharing VO ideas and technology, and a body for promoting and publishing the VO (courtesy of IVOA).

Each MXT science product includes a VO extension that will facilitate its publication in VO-compliant resources and its interoperability with products from other missions. These VO extensions are twofold: 1) a set of ObsCore keywords that describe the coverage along the space, time and energy axes and 2) a JSON serialization of the product provenance that allows the processing chain at the origin of the product to be reconstructed.
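As an illustration of the two parts of such an extension, the Python sketch below builds a small coverage record using ObsCore column names (`s_ra`, `s_dec`, `t_min`, `t_max`, `em_min`, `em_max`) and a toy provenance record. The pointing values, the file names and the structure of the provenance record are hypothetical; in particular, the provenance layout shown here is not the serialization actually used in the MXT products.

```python
import json
from typing import Dict

HC_KEV_M = 1.239841984e-9  # Planck constant times speed of light, in keV * m


def obscore_coverage(ra_deg: float, dec_deg: float, t_start_mjd: float,
                     t_stop_mjd: float, e_min_kev: float, e_max_kev: float
                     ) -> Dict[str, float]:
    # ObsCore expresses the spectral axis as wavelength in metres, so the
    # highest energy gives em_min and the lowest energy gives em_max.
    return {
        "s_ra": ra_deg,
        "s_dec": dec_deg,
        "t_min": t_start_mjd,
        "t_max": t_stop_mjd,
        "em_min": HC_KEV_M / e_max_kev,
        "em_max": HC_KEV_M / e_min_kev,
    }


# Hypothetical provenance record: the key names are illustrative only.
provenance = {
    "activity": "make_spectrum",
    "used": ["events_demo.fits", "caldb_demo.fits"],
    "generated": "spectrum_demo.fits",
}

# Arbitrary pointing and time range, with the MXT band of 0.2 to 10 keV.
coverage = obscore_coverage(83.63, 22.01, 60484.0, 60484.1, 0.2, 10.0)
vo_extension = json.dumps({"obscore": coverage, "provenance": provenance}, indent=2)
print(vo_extension)
```

The energy-to-wavelength conversion is the main point to watch: because the two quantities are inversely related, the bounds swap when going from keV to the metres expected by ObsCore.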